Apple’s latency measure

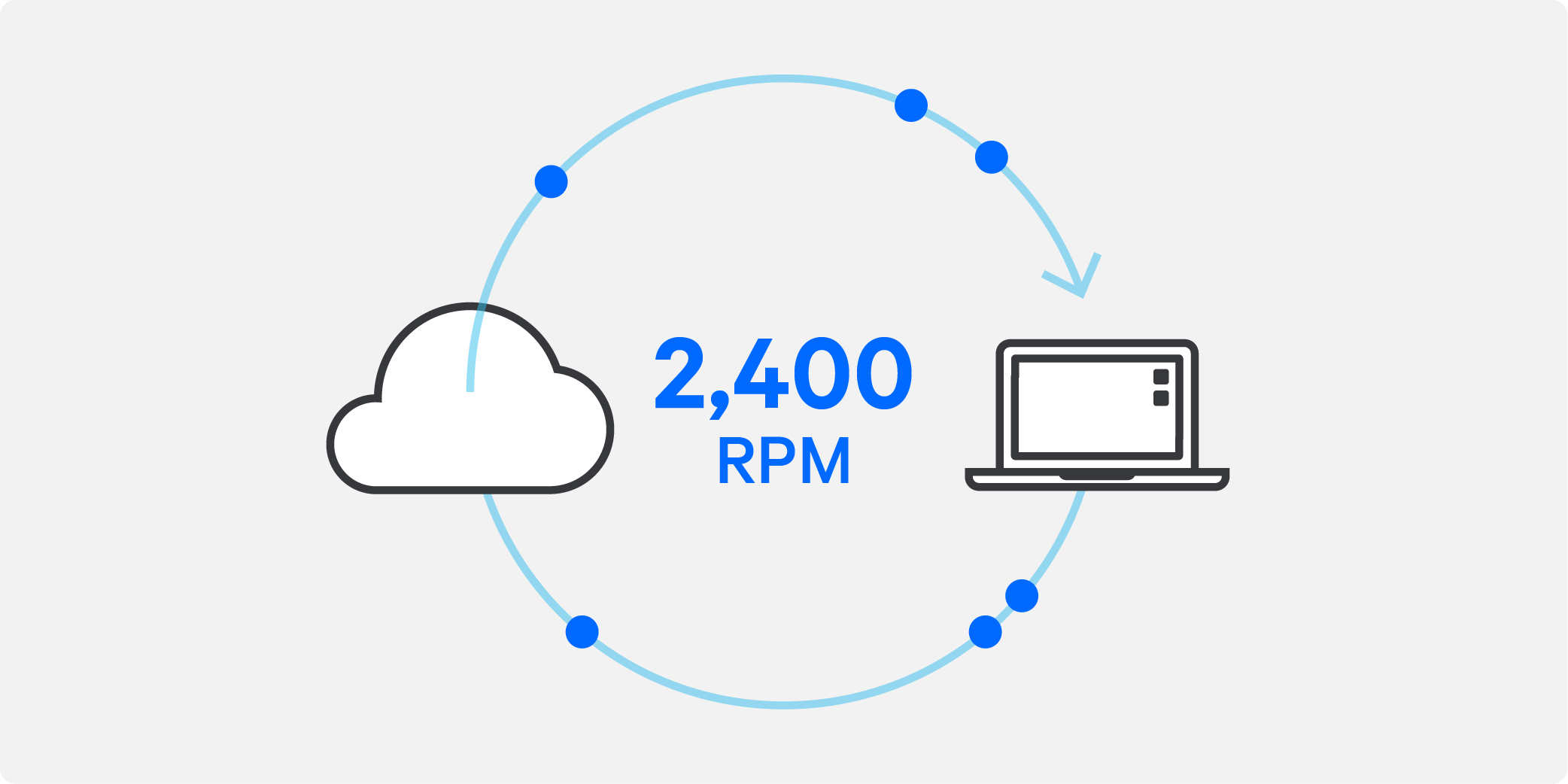

Then we come to the newly created latency metric, Apple’s RPM, which stands for round trips per minute. This works very differently to traditional latency metrics, as Ben Janoff explains. “The most important thing to know about RPM is that normally when we talk about latency we're talking in milliseconds, and the bigger the latency the worse it is. So, one millisecond is really good, five milliseconds is a bit worse, ten milliseconds is worse still.”

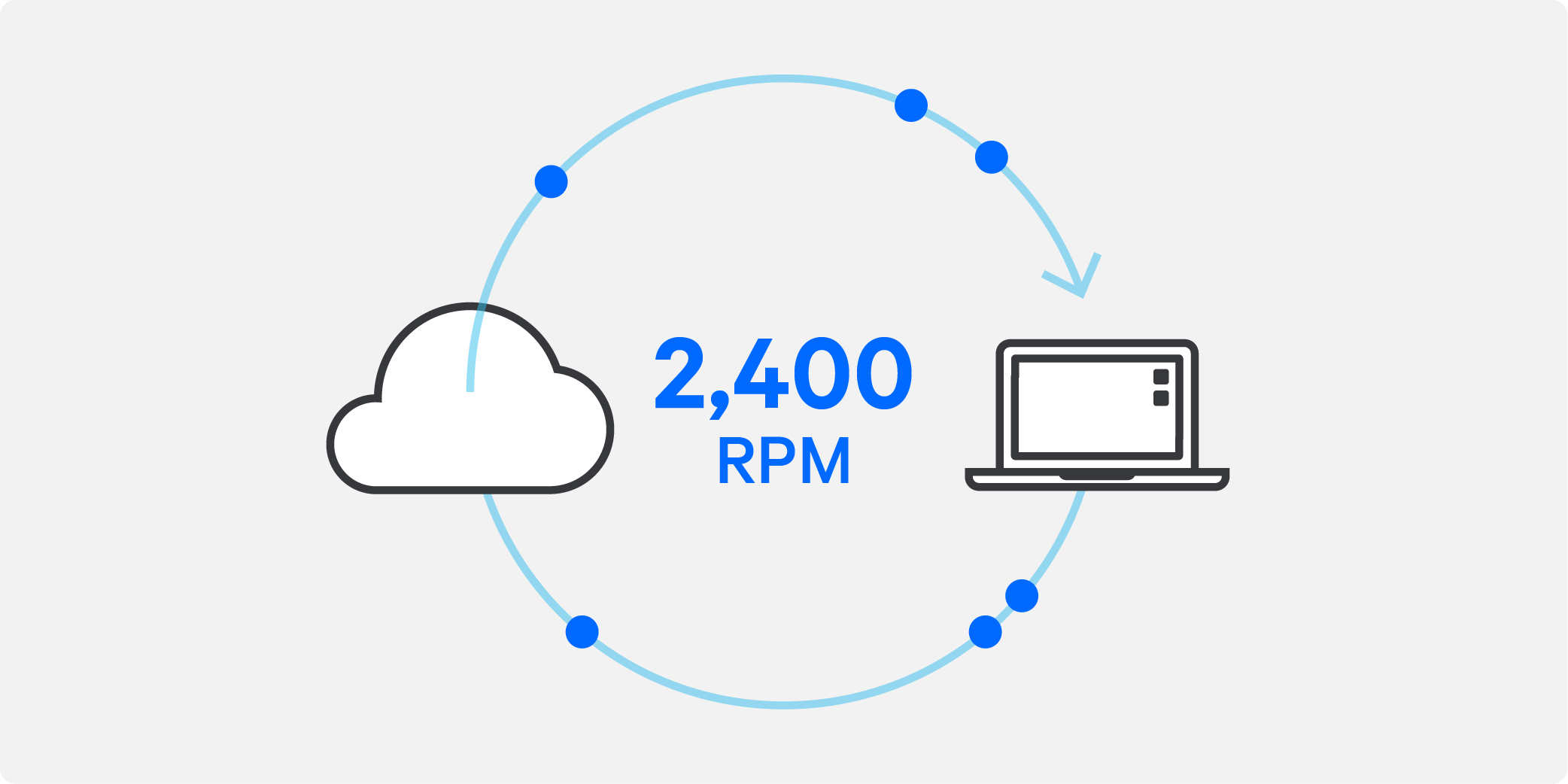

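With RPM, the inverse is true. Because it counts how many round trips can be completed in a minute, a higher number means lower latency, so 800 RPM is much better than 200 RPM. And to give you a sense of scale, “5,000 RPM is a really great connection,” said Ben.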
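Since RPM is a count of round trips per minute, converting between RPM and a round-trip time in milliseconds is a single division by 60,000 (the number of milliseconds in a minute). The minimal Python sketch below shows that conversion for the figures Ben quotes. The function names are illustrative only and are not part of any Apple tool.

```python
MS_PER_MINUTE = 60_000  # 60 seconds * 1,000 milliseconds


def rpm_to_ms(rpm: float) -> float:
    """Round-trip time in milliseconds implied by an RPM figure."""
    if rpm <= 0:
        raise ValueError("RPM must be positive")
    return MS_PER_MINUTE / rpm


def ms_to_rpm(rtt_ms: float) -> float:
    """RPM implied by a round-trip time in milliseconds."""
    if rtt_ms <= 0:
        raise ValueError("round-trip time must be positive")
    return MS_PER_MINUTE / rtt_ms


if __name__ == "__main__":
    # Higher RPM means a shorter round trip, hence lower latency.
    for rpm in (200, 800, 5000):
        print(f"{rpm:>5} RPM = {rpm_to_ms(rpm):.0f} ms per round trip")
```

Run as-is, this shows that 200 RPM corresponds to 300 ms per round trip, 800 RPM to 75 ms and 5,000 RPM to 12 ms.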

Comcast has been measuring RPM in its trials. Jason Livingood said he’s seen customers shoot up from less than 100 RPM to between 1,000 and 2,400 RPM, which he describes as a “dramatic improvement”. Comcast is also running the same RPM test in the trial with L4S turned on in macOS, which should push RPM even higher.

“What's really cool about the SamKnows Whiteboxes out there that have implemented this responsiveness test is that those are all controlled, so we know they're the exact same hardware, unlike in customer homes, where there's a lot of variability – and often a Wi-Fi connection between the test client and internet,” said Jason. “So, this is a great measurement platform for us.”

RPM is also a more realistic measure of latency than a single ping, according to Ben Janoff, because it more accurately models the load on a connection in a typical home. “RPM is all about latency when the connection is being heavily used,” he explained. “When we just talk about latency, we could be talking about latency under load, or we could be talking about latency not under load. A number in milliseconds doesn't really convey any information about what else was happening on the line. But when we talk about RPM, we know that we're talking about a connection that's being used as much as possible and then we measure the latency. It allows us to see how the network is going to perform under harsher conditions that reflect real-world use.”

Ben gives a real-world scenario that RPM would accurately model. “You have a situation where mum has started a backup on her laptop and that transfer is going along quite nicely, and then upstairs the child is trying to play online video games, but their experience is really degraded by the bulk transfer happening. That's one case under which we can use RPM to measure the latency, but not only does it measure the latency of the gaming session, it also measures the latency of the bulk transfer connection.”
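One common way to see why the backup hurts the game is to treat the home router or modem as a simple first-in, first-out queue. The bulk upload keeps packets waiting in that queue, and every gaming packet has to wait behind them. The Python sketch below works through that arithmetic. It assumes a single FIFO queue, and every number in it (idle round trip, uplink speed, queued bytes) is hypothetical rather than taken from any trial.

```python
IDLE_RTT_MS = 20.0            # hypothetical round-trip time on a quiet line
UPLINK_MBPS = 10.0            # hypothetical upstream capacity in Mbit/s
BACKUP_QUEUE_BYTES = 500_000  # hypothetical bytes the backup keeps queued


def queueing_delay_ms(queued_bytes: int, link_mbps: float) -> float:
    """Time for the link to drain a standing queue, in milliseconds.

    1 Mbit/s moves 1,000 bits per millisecond.
    """
    bits = queued_bytes * 8
    return bits / (link_mbps * 1000)


def loaded_rtt_ms(idle_rtt_ms: float, queued_bytes: int, link_mbps: float) -> float:
    """Round-trip time seen by a packet stuck behind the bulk transfer."""
    return idle_rtt_ms + queueing_delay_ms(queued_bytes, link_mbps)


if __name__ == "__main__":
    loaded = loaded_rtt_ms(IDLE_RTT_MS, BACKUP_QUEUE_BYTES, UPLINK_MBPS)
    # RPM is 60,000 divided by the round-trip time in milliseconds.
    print(f"idle:   {IDLE_RTT_MS:.0f} ms -> {60_000 / IDLE_RTT_MS:.0f} RPM")
    print(f"loaded: {loaded:.0f} ms -> {60_000 / loaded:.0f} RPM")
```

With these made-up figures, the same line measures 20 ms (3,000 RPM) when idle but 420 ms (about 143 RPM) while the backup runs. A single ping on the quiet line would show only the first number.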

“This is really important for situations such as video streaming,” Ben added. “If you imagine you're watching a video streaming service, and you come to the realisation that you've already seen this part of the episode and you want to fast forward, you grab the video scrubber and you sweep it to the right. Here, the latency is really important, because you also have a bulk transfer going on. There’s a lot of video streaming data coming down from the internet to your device, but you also want it to be responsive. You want it to not take too long to chew through all the data that was already sent before you start getting to the new data that you care about at the point you moved the playback scrubber. So, RPM is very interesting because it blends latency of the traffic that is not generating the load, and the latency of the load itself.”
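The blending Ben describes can be sketched as an average of two groups of latency probes. The first group is sent over separate connections that are not generating load. The second is sent over the load-generating connections themselves. Apple’s published methodology uses its own aggregation across several probe types. The plain, equal-weight average in the Python sketch below is therefore a simplifying assumption, not Apple’s formula, and the function and parameter names are hypothetical.

```python
from statistics import mean


def blended_rpm(non_load_ms: list[float], load_ms: list[float]) -> float:
    """Hypothetical RPM that blends two kinds of latency probe.

    non_load_ms: round trips on connections not generating the load.
    load_ms: round trips on the load-generating connections themselves.
    Assumption: both groups get equal weight; the real test differs.
    """
    if not non_load_ms or not load_ms:
        raise ValueError("need at least one probe of each kind")
    combined_ms = (mean(non_load_ms) + mean(load_ms)) / 2
    # Convert the blended round-trip time into round trips per minute.
    return 60_000 / combined_ms


if __name__ == "__main__":
    # Made-up samples: the bulk transfer's own connection is slower to respond.
    print(blended_rpm([40, 50, 60], [100, 110, 90]))
```

Here the non-load probes average 50 ms and the load probes average 100 ms. They blend to 75 ms, or 800 RPM. A sluggish bulk-transfer connection drags the score down even when the other traffic is responsive, which is the behaviour Ben describes with the video scrubber.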